Facebook and Instagram helped spread Covid misinformation to hundreds of thousands

Menlo Park, California - Misinformation about Covid-19 and vaccines has been allowed to spread on more than a dozen Facebook and Instagram accounts which have gained some 370,000 followers over the past year, according to a new report.

A study by NewsGuard, which monitors online misinformation, highlighted 20 accounts, pages and groups which were continuing to push conspiracy theories and false claims around Covid-19 but were allowed to remain online and gain tens of thousands of new followers.

The groups included posts claiming that Covid vaccines harm children and are generally unsafe, with many of these posts not flagged to users by the social media platforms, NewsGuard said.

The report said that, while some of the pages in question carried Facebook-generated labels saying they contained posts about Covid-19, users were not told whether the information was trustworthy.

NewsGuard said some pages did include Facebook labels offering links to "the latest coronavirus information," but added that the platforms were not doing enough to tell users how trustworthy the sources cited in these accounts' posts were.

The monitoring group said it has been submitting reports to the World Health Organization (WHO) since September 2020, highlighting accounts, groups and pages which spread Covid misinformation. The WHO uses these reports in its work with social media to mitigate the harm of such claims.

But NewsGuard said its study shows that, since it began flagging these accounts, all but one of the pages have seen their follower numbers increase.

"Little or nothing" done to address the problem

Alex Cadier, UK managing director for NewsGuard and author of the report, said: "Our report shows that, even when warned repeatedly, Facebook and Instagram do not protect their users from Covid-19 and vaccine misinformation."

NewsGuard's reports to the WHO are fully disclosed, and Facebook could provide its users with transparent explanations of why so many popular sources on its platform are untrustworthy, promoting dangerous hoaxes.

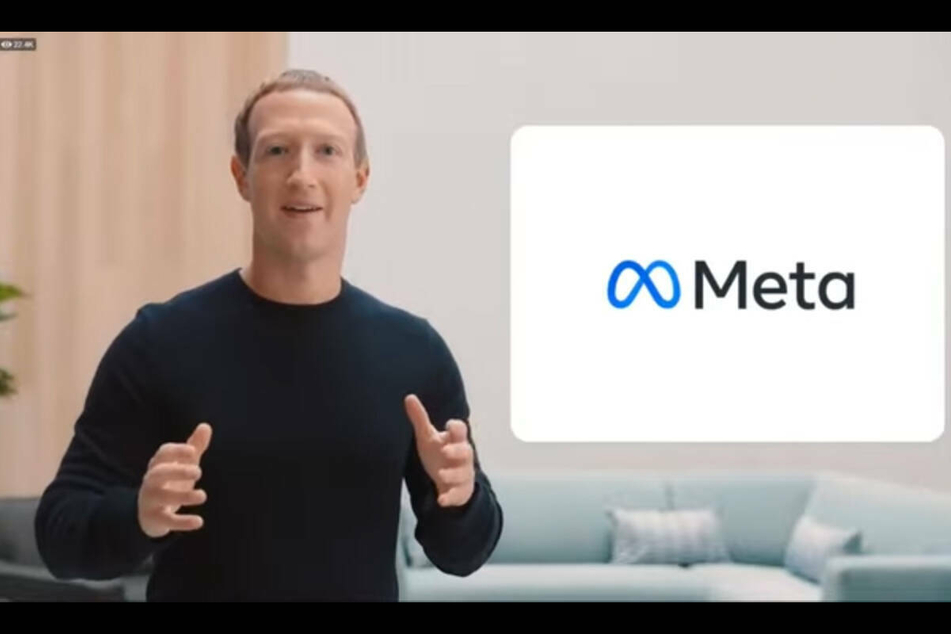

"Instead, they do little to nothing. The company's engagement-at-all-costs mantra means that viral and divisive sources of misinformation continue to flourish, despite warnings from NewsGuard and the clear danger posed to users. Facebook gave itself a new name, but their promotion of misinformation remains the same."

Last week, Facebook rebranded its parent company, which oversees its range of apps and services, as Meta.

In response to NewsGuard's research, a Meta spokesman said: "We're encouraging people on our platforms to get vaccinated and taking action against misinformation."

"During the pandemic, we have removed more than 20 million pieces of harmful misinformation and we've taken down content identified in this report which violates our rules."

"In total, we've now banned more than 3,000 accounts, pages and groups for repeatedly breaking our rules."

"We're also labelling all posts about the vaccines with accurate information and worked with independent fact-checkers to mark 190 million posts as false."

Cover photo: IMAGO / ZUMA Wire